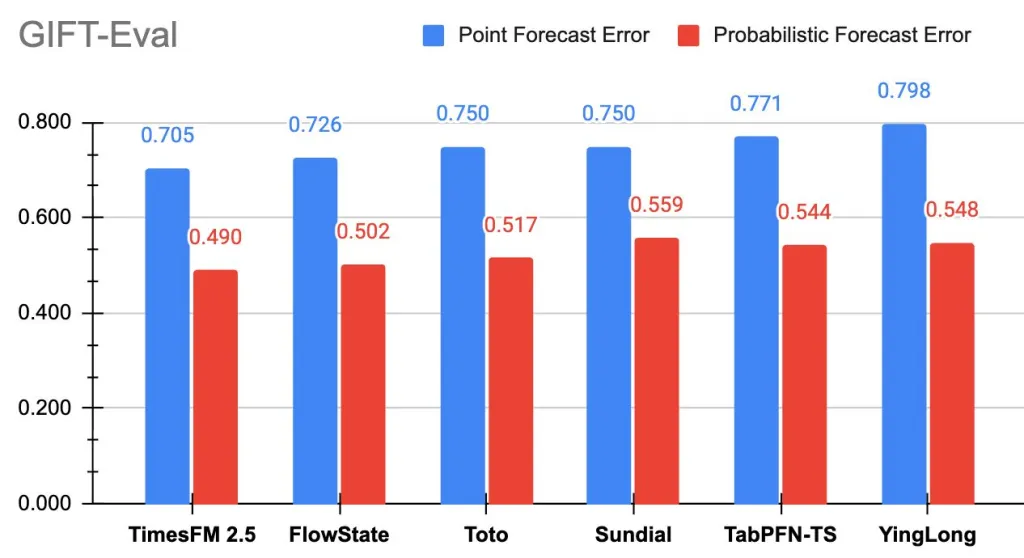

Google Research has released TimesFM-2.5, a 200M-parameter, decoder-only time-series foundation model with a 16K-point context length and native support for probabilistic forecasting. The new checkpoint is live on Hugging Face. On GIFT-Eval, TimesFM-2.5 now tops the leaderboard among zero-shot foundation models on both accuracy metrics, MASE and CRPS.

What is Time-Series Forecasting?

Time-series forecasting is the practice of analyzing sequential data points collected over time to identify patterns and predict future values. It underpins critical applications across industries, including forecasting product demand in retail, monitoring weather and precipitation trends, and optimizing large-scale systems such as supply chains and energy grids. By capturing temporal dependencies and seasonal variations, time-series forecasting enables data-driven decision-making in dynamic environments.
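To make "capturing seasonal variations" concrete, here is a minimal sketch of the simplest seasonality-aware forecaster, the classical seasonal-naive baseline, which just repeats the last observed season. It is a textbook baseline, not how TimesFM works; the function name and the sales figures are hypothetical, chosen only for illustration.

```python
from typing import List, Sequence


def seasonal_naive_forecast(history: Sequence[float], season_length: int,
                            horizon: int) -> List[float]:
    """Forecast by repeating the last full season of observations.

    The value h steps ahead is copied from the observation one season
    earlier (cycling through the last season for long horizons).
    """
    if season_length <= 0 or horizon < 0:
        raise ValueError("season_length must be positive and horizon non-negative")
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    last_season = list(history[-season_length:])
    # Cycle through the last season as many times as the horizon requires.
    return [last_season[h % season_length] for h in range(horizon)]


# Hypothetical daily sales with a weekly (7-day) pattern.
sales = [10, 12, 11, 13, 20, 25, 18,
         11, 13, 12, 14, 21, 26, 19]
print(seasonal_naive_forecast(sales, season_length=7, horizon=10))
# [11, 13, 12, 14, 21, 26, 19, 11, 13, 12]
```

A foundation model such as TimesFM aims to learn far richer patterns than this, but seasonal naive remains a common yardstick that learned forecasters are expected to beat.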

What changed in TimesFM-2.5 vs v2.0?

- Parameters: 200M (down from 500M in 2.0).

- Max context: 16,384 points (up from 2,048).

- Quantiles: Optional 30M-param quantile head for continuous quantile forecasts up to a 1K-step horizon.

- Inputs: No “frequency” indicator required; new inference flags (flip-invariance, positivity inference, quantile-crossing fix).

- Roadmap: Upcoming Flax implementation for faster inference; covariates support slated to return; docs being expanded.
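The release notes name the new inference options but not their mechanics. The sketch below shows one common way each idea can be layered on top of any forecaster: flip-invariance by averaging f(x) with -f(-x), positivity by clipping at zero when the history is non-negative, and the quantile-crossing fix by sorting quantile values at each step. This is an assumption for illustration, not TimesFM's code; the function names and the toy forecaster are hypothetical and are not the library's actual flags.

```python
from typing import Callable, List, Sequence

# Hypothetical signature: a forecaster maps (history, horizon) to point forecasts.
Forecaster = Callable[[Sequence[float], int], List[float]]


def flip_invariant(forecast_fn: Forecaster, history: Sequence[float],
                   horizon: int) -> List[float]:
    """Average f(x) with -f(-x) so that negating the input negates the forecast."""
    direct = forecast_fn(history, horizon)
    mirrored = forecast_fn([-x for x in history], horizon)
    return [(d - m) / 2.0 for d, m in zip(direct, mirrored)]


def enforce_positivity(history: Sequence[float],
                       forecast: Sequence[float]) -> List[float]:
    """If the history never goes negative, clip negative forecasts to zero."""
    if all(x >= 0 for x in history):
        return [max(0.0, y) for y in forecast]
    return list(forecast)


def fix_quantile_crossing(quantiles: List[List[float]]) -> List[List[float]]:
    """Sort quantile values at each step so higher levels never fall below lower ones.

    quantiles[i][h] is the forecast for the i-th quantile level (ascending) at step h.
    """
    if not quantiles:
        return []
    horizon = len(quantiles[0])
    fixed = [[0.0] * horizon for _ in quantiles]
    for h in range(horizon):
        column = sorted(level[h] for level in quantiles)
        for i, value in enumerate(column):
            fixed[i][h] = value
    return fixed


def last_value_plus_one(history: Sequence[float], horizon: int) -> List[float]:
    """Toy forecaster with a built-in upward bias (hypothetical, for the demo)."""
    return [history[-1] + 1.0] * horizon


print(flip_invariant(last_value_plus_one, [3.0, 4.0, 5.0], 2))  # [5.0, 5.0]
print(enforce_positivity([0.0, 2.0, 1.0], [0.5, -0.3]))          # [0.5, 0.0]
print(fix_quantile_crossing([[1.0, 5.0], [2.0, 4.0], [3.0, 3.5]]))
# [[1.0, 3.5], [2.0, 4.0], [3.0, 5.0]]
```

In the demo, averaging with the mirrored forecast cancels the toy model's +1 offset, because a sign-dependent bias cannot survive the symmetrization; sorting is one simple way to restore monotone quantiles, though other rearrangement schemes exist.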

Why does a longer context matter?

16K historical points allow a single forward pass to capture multi-seasonal structure, regime breaks, and low-frequency components without tiling or hierarchical stitching. In practice, that reduces pre-processing heuristics and improves stability for domains where context >> horizon (e.g., energy load, retail demand). The longer context is a core design change explicitly noted for 2.5.
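A back-of-the-envelope calculation shows what the jump from 2,048 to 16,384 points means in wall-clock history. Only the two context lengths come from the article; the sampling frequencies and the weekly season length are illustrative assumptions.

```python
from datetime import timedelta

CONTEXT_V20 = 2_048   # max context points for TimesFM 2.0 (per the article)
CONTEXT_V25 = 16_384  # max context points for TimesFM-2.5 (per the article)

# Illustrative sampling intervals (assumptions, not from the release).
FREQUENCIES = {
    "15-minute": timedelta(minutes=15),
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
}


def coverage_days(n_points: int, step: timedelta) -> float:
    """Wall-clock span, in days, covered by n_points samples spaced `step` apart."""
    return n_points * step / timedelta(days=1)


for name, step in FREQUENCIES.items():
    print(f"{name:>10}: v2.0 {coverage_days(CONTEXT_V20, step):8.1f} days | "
          f"v2.5 {coverage_days(CONTEXT_V25, step):8.1f} days")

# Hourly energy load with a weekly cycle (168 hourly points per week).
WEEK = 168
print(f"weekly cycles in context: v2.0 {CONTEXT_V20 // WEEK}, v2.5 {CONTEXT_V25 // WEEK}")
```

At hourly resolution, for example, 16,384 points span about 683 days (roughly 1.9 years, so more than one full yearly cycle) versus about 85 days for 2,048, and they hold 97 full weekly cycles instead of 12.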

What’s the research context?

TimesFM’s core thesis—a single, decoder-only foundation model for forecasting—was introduced in the ICML 2024 paper and Google’s research blog. GIFT-Eval (Salesforce) emerged to standardize evaluation across domains, frequencies, horizon lengths, and univariate/multivariate regimes, with a public leaderboard hosted on Hugging Face.
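GIFT-Eval ranks models on MASE for point accuracy and CRPS for probabilistic accuracy. The sketch below shows textbook versions of both under simplifying assumptions: MASE is scaled by the in-sample seasonal-naive error, and CRPS is approximated as twice the average pinball (quantile) loss over a few quantile levels. GIFT-Eval's exact normalization and aggregation across series may differ, and the numbers are made up.

```python
from typing import Dict, Sequence


def mase(history: Sequence[float], actual: Sequence[float],
         forecast: Sequence[float], season_length: int = 1) -> float:
    """Mean Absolute Scaled Error: forecast MAE over the in-sample seasonal-naive MAE."""
    if len(history) <= season_length:
        raise ValueError("history must be longer than season_length")
    naive_errors = [abs(history[t] - history[t - season_length])
                    for t in range(season_length, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    if scale == 0:
        raise ValueError("seasonal-naive error is zero; MASE is undefined")
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / scale


def pinball_loss(y: float, q: float, tau: float) -> float:
    """Quantile loss: under-prediction costs tau, over-prediction costs 1 - tau."""
    diff = y - q
    return max(tau * diff, (tau - 1.0) * diff)


def crps_from_quantiles(actual: Sequence[float],
                        quantile_forecasts: Dict[float, Sequence[float]]) -> float:
    """Approximate CRPS as 2 x mean pinball loss over quantile levels and steps."""
    losses = [pinball_loss(y, q, tau)
              for tau, preds in quantile_forecasts.items()
              for y, q in zip(actual, preds)]
    return 2.0 * sum(losses) / len(losses)


history = [1.0, 2.0, 3.0, 4.0, 5.0]
actual = [6.0, 7.0]
print(mase(history, actual, forecast=[6.5, 6.0]))  # 0.75
quantiles = {0.1: [5.0, 6.0], 0.5: [6.0, 7.0], 0.9: [7.0, 8.0]}
print(crps_from_quantiles(actual, quantiles))
```

A MASE below 1 means the forecast beats the naive baseline on average; CRPS rewards quantile forecasts that are both well calibrated and tight around the realized values.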

Key Takeaways

- Smaller, Faster Model: TimesFM-2.5 runs with 200M parameters (less than half of 2.0's 500M) while improving accuracy.

- Longer Context: Supports 16K input length, enabling forecasts with deeper historical coverage.

- Benchmark Leader: Now ranks #1 among zero-shot foundation models on GIFT-Eval for both MASE (point accuracy) and CRPS (probabilistic accuracy).

- Production-Ready: Efficient design and quantile forecasting support make it suitable for real-world deployments across industries.

- Broad Availability: The model is live on Hugging Face.

Summary

TimesFM-2.5 shows that foundation models for forecasting are moving past proof-of-concept into practical, production-ready tools. By cutting parameters by more than half (from 500M to 200M) while extending context length eightfold and leading GIFT-Eval on both point and probabilistic accuracy, it marks a step-change in efficiency and capability. With Hugging Face access already live and BigQuery/Model Garden integration on the way, the model is positioned to accelerate adoption of zero-shot time-series forecasting in real-world pipelines.

Check out the Model card (HF), Repo, Benchmark and Paper.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.