We have all used LLMs in different capacities for a multitude of tasks. But how often have you used one for something specific to your culture? That is where all that processing power hits a brick wall. The English-centric nature of most large language models makes them exclusive to an audience familiar with the language.

AI4Bharat is here to change that. Their latest offering, Indic LLM-Arena, aspires to provide an open-source ecosystem for Indian language AI. This article is a guide to what Indic LLM-Arena offers and what its plans are for the future.

What is Indic LLM-Arena?

As the name suggests, Indic LLM-Arena is an Indianized version of LMArena, the industry standard for LLM benchmarks. An initiative by AI4Bharat (IIT Madras), supported by Google Cloud, the Indic LLM-Arena leaderboard is designed to benchmark LLMs on the three pillars that affect the Indian experience: language, context, and safety.

The Gaps in Current LLM Evaluation

The current leaderboards, while essential for gauging the progress of models, fail to capture the realities of our nation. The gap exists across the following dimensions:

1. The Language Gap

The gap isn’t merely due to a lack of support for vernacular languages. It is also partly due to a limited understanding of how Indic languages are used in communication, and to limited success in code-switching scenarios, where a speaker mixes languages within a single message. Even models trained specifically on regional languages fail to perform satisfactorily as soon as the prompt is not monolingual.
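To make the idea of a code-switched prompt concrete, here is a minimal sketch that flags text mixing more than one writing system. It is only an illustration under stated assumptions: the helper names (`script_of`, `scripts_in`, `is_code_switched`) are hypothetical, the script is inferred from the first word of each letter's Unicode character name, and nothing here reflects how Indic LLM-Arena itself processes prompts.

```python
import unicodedata
from collections import Counter


def script_of(ch):
    """Return a rough script label for a letter, or None for non-letters.

    Assumption: the first word of the Unicode character name
    (e.g. 'DEVANAGARI', 'GURMUKHI', 'LATIN') is a good enough label.
    """
    if not ch.isalpha():
        return None  # skip spaces, digits, punctuation, vowel signs
    try:
        return unicodedata.name(ch).split()[0]
    except ValueError:
        return None  # character has no Unicode name


def scripts_in(text):
    """Count how many letters of each script appear in the text."""
    return Counter(s for s in map(script_of, text) if s)


def is_code_switched(text):
    """True when letters from more than one script are present."""
    return len(scripts_in(text)) > 1


print(scripts_in("ChatGPT क्या है"))
print(is_code_switched("वासु नाम का क्या अर्थ है"))
```

A script check like this only catches mixing that is visible in the writing system. Romanized Hindi typed in Latin letters alongside English would pass as a single script, so it would need a language-identification step instead.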

2. The Cultural Gap

India is not a monolith. There isn’t a one-size-fits-all, pan-India response, because of the multicultural and multi-ethnic environment that India fosters. A culturally aware model would offer answers that are appropriate for the given language or region, a capability that current models lack.

3. The Safety & Fairness Gap

A model’s safety and fairness system needs to learn the kinds of risks that actually show up in India. That includes regional prejudices, communal misinformation, and the quieter ways caste stereotypes slip in. Off-the-shelf safety tests don’t capture these realities, so the training has to account for them directly.

How to Access?

You can access Indic LLM-Arena at their official chat interface: https://arena.ai4bharat.org/#/chat

Make sure to create an account; otherwise you’ll be limited to the Random option, in which you can only compare two models, with one response per chat.

Hands-On: Testing the Interface

To get a grip on everything Indic LLM-Arena has to offer, we’ll put to the test the three primary modes that the site operates in:

- Direct chat

- Compare models

- Random

You can toggle between the modes using the chat mode dropdown.

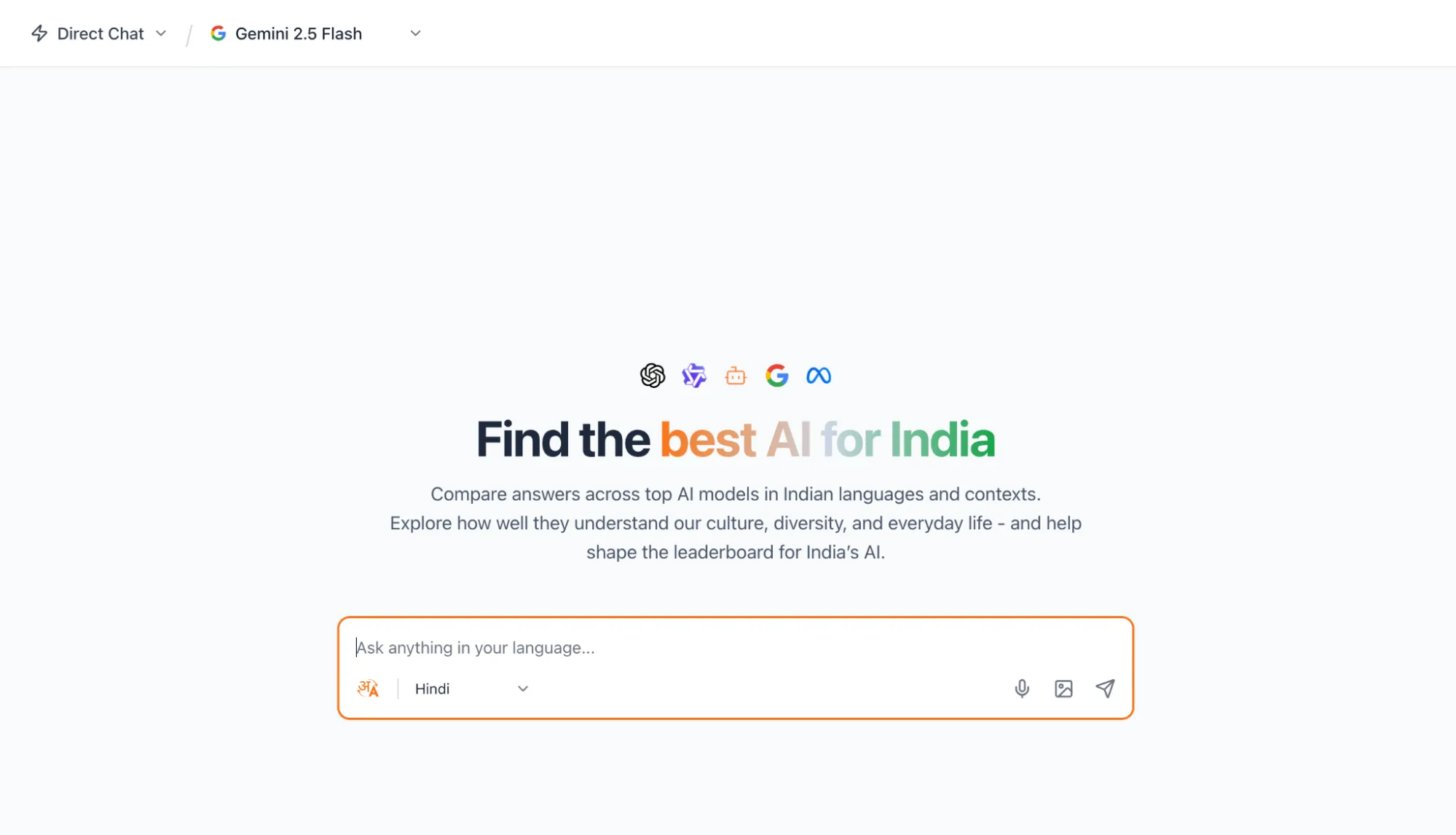

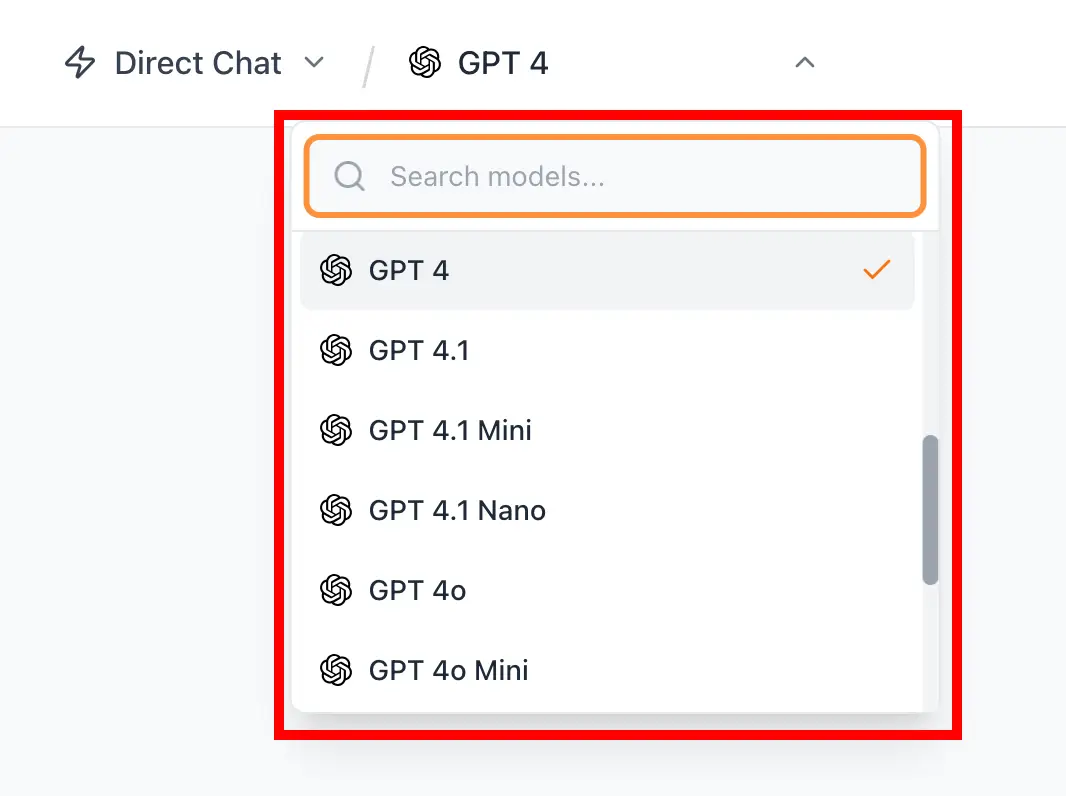

Direct Chat

For this test, I’ll give a prompt in Hindi to see how well the model responds. I’ll ask the question “What does the name Vasu mean?” using the Gemini 2.5 Flash model.

Prompt: “वासु नाम का क्या अर्थ है”

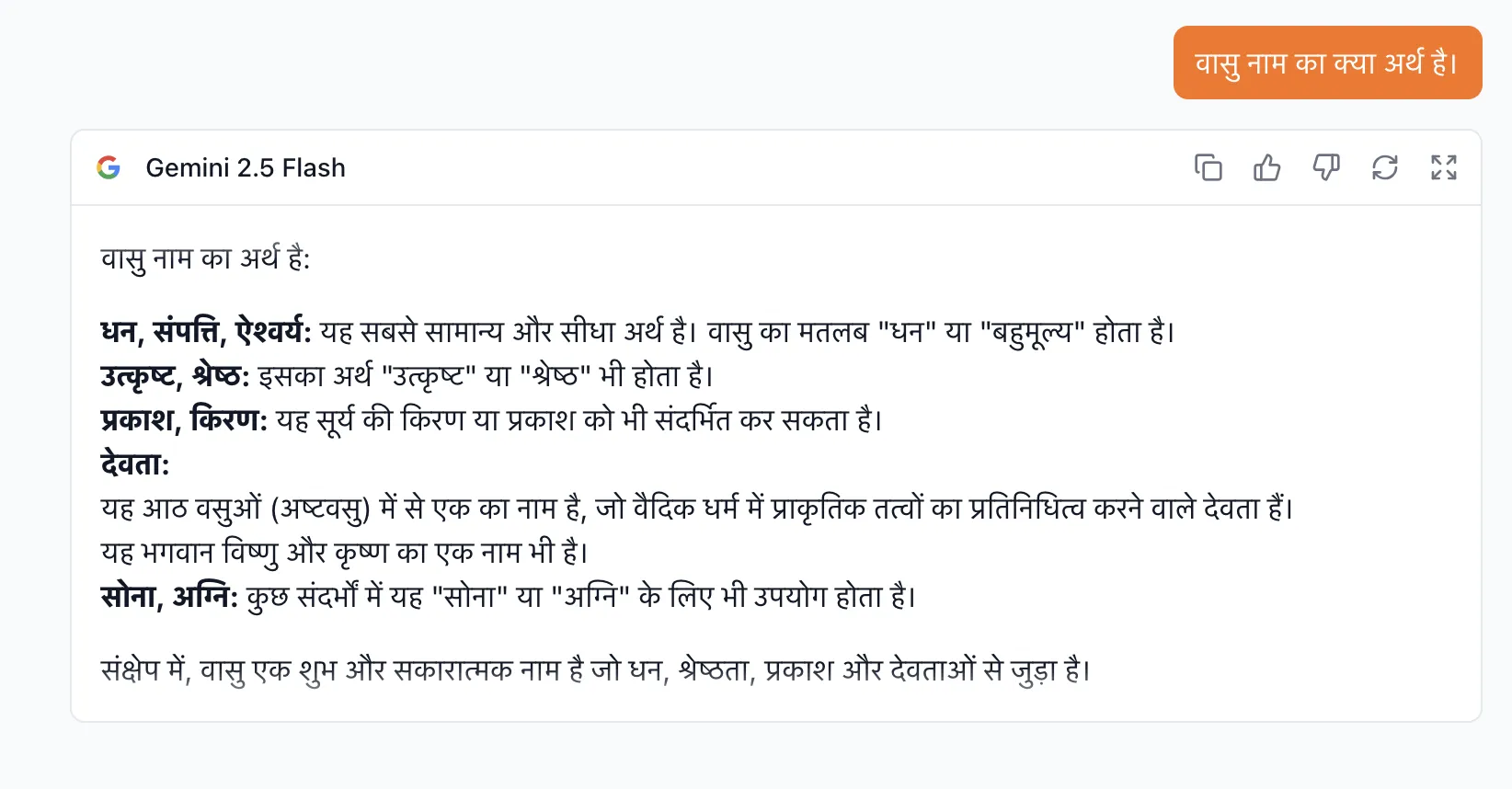

Response:

Review: Promising stuff indeed! The response was in plain Hindi, with appropriate text emphasis. The information is factually correct, as can be corroborated from the Wikipedia page for the name.

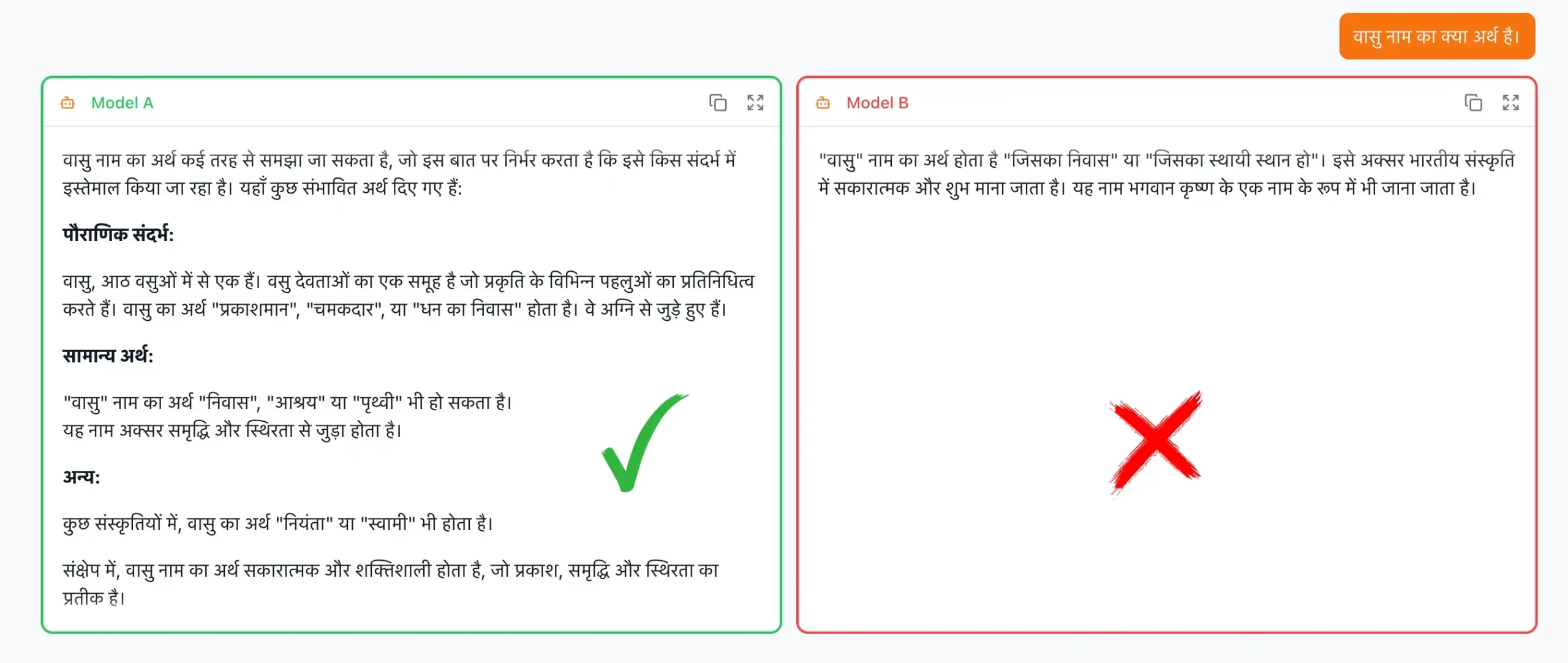

Compare Models

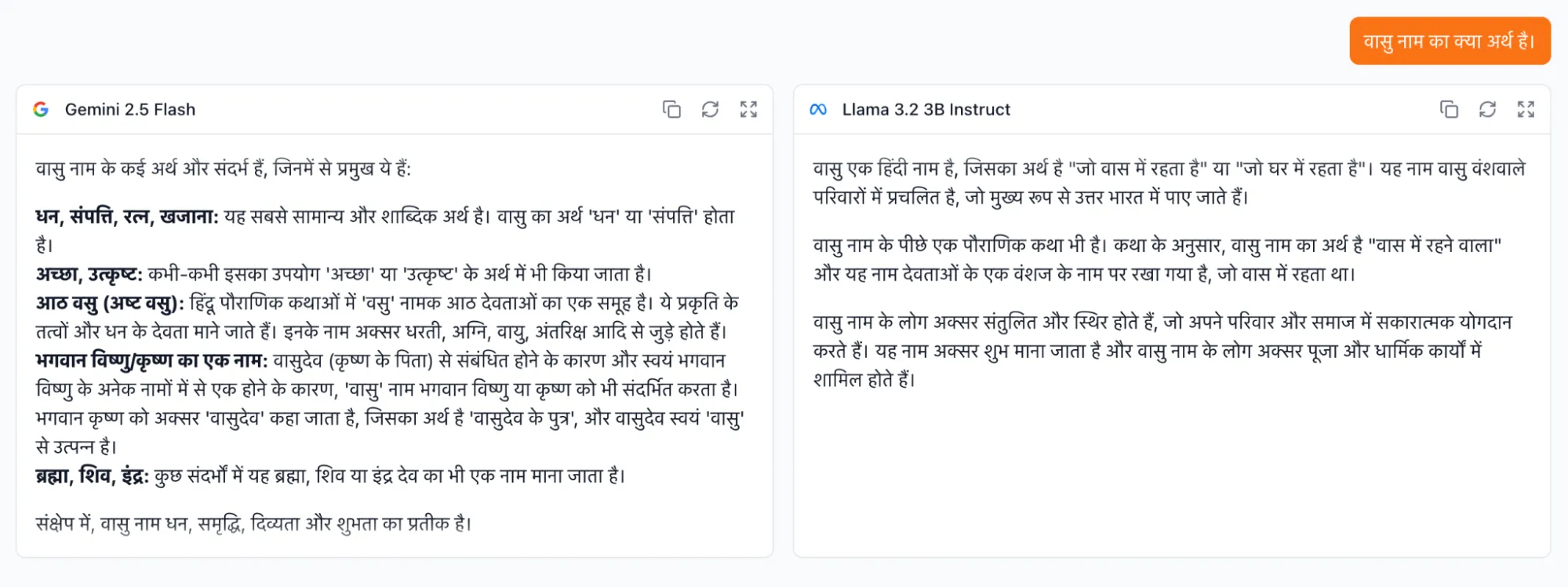

For this test, I’ll give the same prompt as in the previous task to the models Gemini 2.5 Flash and Llama 3.2 3B Instruct.

Prompt: “वासु नाम का क्या अर्थ है”

Response:

Review: This one was intriguing. Now that we can run two models side by side, the difference in response speed is conspicuous. Gemini 2.5 Flash gave its elaborate response in less than half the time Llama 3.2 3B took. Both responses were completely in Hindi.

Random

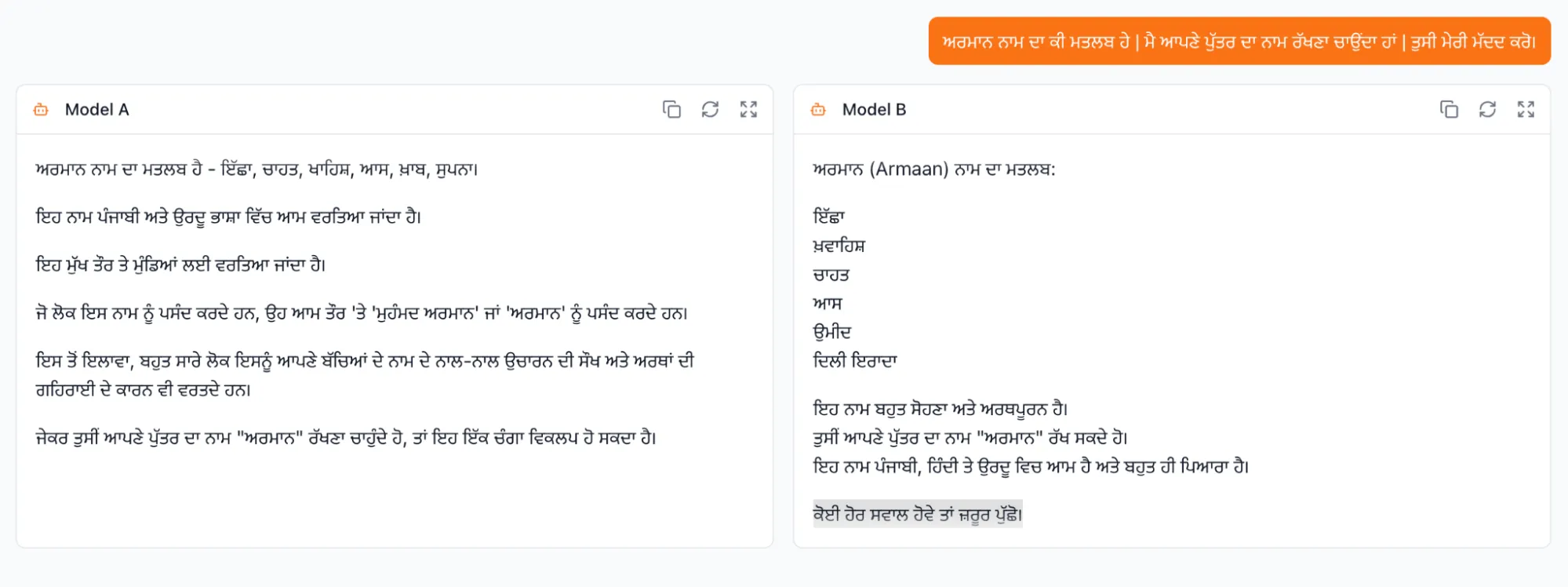

For this test, I’ll give a prompt in Punjabi to two models (completely unknown) to see how well they respond. I’ll ask the question “What does the name Armaan mean? I want to name my son Armaan. Please help me.”

Prompt: “ਅਰਮਾਨ ਨਾਮ ਦਾ ਕੀ ਮਤਲਬ ਹੇ | ਮੈ ਆਪਣੇ ਪੁੱਤਰ ਦਾ ਨਾਮ ਰੱਖਣਾ ਚਾਉਂਦਾ ਹਾਂ | ਤੁਸੀ ਮੇਰੀ ਮੱਦਦ ਕਰੋ।”

Response:

Review: The response was in Punjabi and was factually correct, based on the Wikipedia page for the name. The two models took some time to frame their responses completely. This could be because regional languages are somewhat more computationally heavy for models than English.

Verdict

The three modes of LLM-Arena offered enough variety to keep my interest. Whether it is a blind test of models, a comparison between favorites, or just the regular prompt-response routine, the platform has a lot on display. I could tell the difference in response times between English and vernacular queries, which further highlights the struggles of traditional LLMs in processing Indian languages. LLM-Arena provides a unified platform for testing newer models, as well as a leaderboard for the best ones.

But LLM-Arena isn’t without its flaws. Here are some problems that I faced while using it:

- Context-less transliteration: Transliteration, while an amazing feature in itself, lacks context and has some latency. This makes it hard to write code-switched queries, as the model has a hard time handling the selected vernacular language mixed with loanwords (like ChatGPT).

- Lack of model representation: The models offered as of now are different variants from four LLM families, namely ChatGPT (10), Gemini (5), Qwen (1), and Meta (3). There are two problems with this:

  - A lot of the heavy hitters, like DeepSeek, Claude, and many more, aren’t available.

  - Local LLMs like Sarvam-1, which are language models specifically optimized for Indian languages, aren’t represented.

- UI problems: The UI isn’t without its flaws. I encountered the following issue while using it:

The Future

LLM-Arena is an open letter to people who want to improve how well models handle the languages of India. As mentioned by AI4Bharat, the leaderboard is still being curated as more and more data is provided by users like us. So we can assist in this process by offering our two cents about our own experiences of using these models. This would assist in the fine-tuning of these models and, in turn, make them more accessible to people across the country.

The requirement of English is soon to be obviated as initiatives such as Indic LLM-Arena come into the picture. By addressing localized challenges, providing alternatives to established names, and voicing regional concerns, it is a step in the right direction towards making AI more accessible and personalized.

Cast Your Vote

Indic LLM-Arena is solely dependent on the feedback of its users: us! To make it the platform it aspires to be, and to push the envelope when it comes to Indianized LLMs, we have to provide our inputs to the site. Visit their official page to contribute.
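To see why each vote matters, here is a minimal sketch of how pairwise votes from blind comparisons could be turned into a ranking. It assumes a simple Elo-style update, which is one common way to aggregate head-to-head results. This article does not describe how AI4Bharat actually computes its leaderboard, so the method, the function names, the model names, and the parameters (`k`, `initial`) are all hypothetical.

```python
def expected_score(r_a, r_b):
    """Probability that a model rated r_a beats one rated r_b (Elo formula)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update_elo(ratings, winner, loser, k=32.0, initial=1000.0):
    """Apply one vote: move rating points from the loser to the winner.

    Assumption: every model starts at `initial`, and `k` controls how
    much a single vote can shift the ratings.
    """
    r_w = ratings.get(winner, initial)
    r_l = ratings.get(loser, initial)
    gain = k * (1.0 - expected_score(r_w, r_l))
    ratings[winner] = r_w + gain
    ratings[loser] = r_l - gain
    return ratings


# Hypothetical votes: each tuple is (preferred model, other model).
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
]

ratings = {}
for winner, loser in votes:
    update_elo(ratings, winner, loser)

# Highest rating first.
leaderboard = sorted(ratings, key=ratings.get, reverse=True)
print(leaderboard)
```

With only a handful of votes the ordering is fragile, which is one reason a leaderboard of this kind would depend on many users contributing comparisons across many languages.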


Frequently Asked Questions

A. It tests how well models handle Indian languages, cultural context, and safety concerns, giving a more realistic picture of performance for Indian users.

A. Direct Chat lets you test a single model, Compare Models shows side-by-side responses, and Random offers blind comparisons without knowing which model replied.

A. Some major models are missing, transliteration can lag, and a few interface issues still show up, though the platform is actively improving.
