From the release of GPT-1 in 2018 to GPT-5 in 2025, generative AI (GenAI) has sparked a revolution. Context windows have grown from roughly 4,000 tokens in earlier models to hundreds of thousands of tokens, and a million or more in some recent models, with each generation building on the last. GenAI works on a simple premise: the user provides input, and the AI generates output. However, GenAI still faces clear limits: it handles only digital tasks, relies on its training data, and can hallucinate. Agentic AI workflows aim to address these limits by distributing work across many smaller agents, each able to make decisions based on a variety of factors. In this article, I take a deep dive into the reasons behind the rise of multi-agent workflows.

What Are AI Agents?

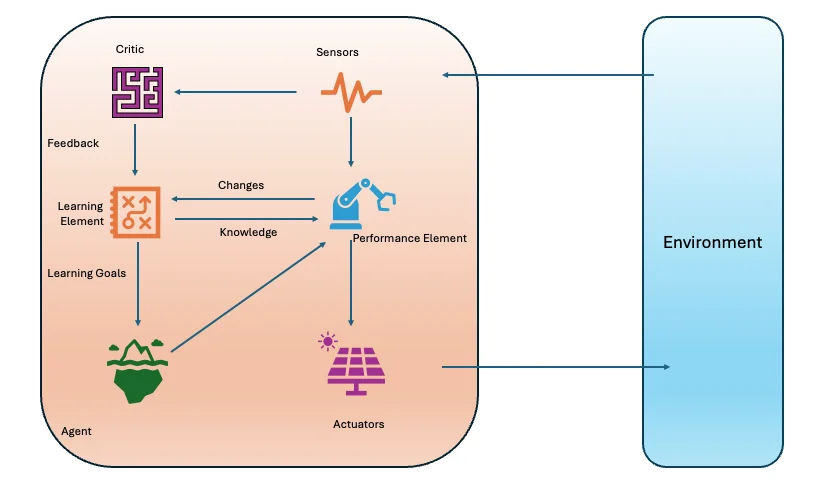

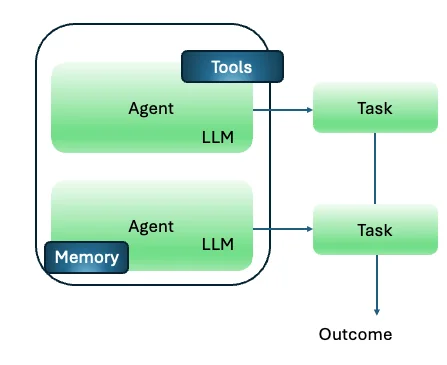

Generative AI is the foundation on which a new class of systems, known as AI agents, has emerged. These agents combine large language models with function calling (letting the model invoke external tools) and multi-step reasoning, and several of them can be coordinated in a multi-agent workflow to produce results.
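To make function calling more concrete, here is a minimal sketch of a single tool-calling step. It assumes a stand-in `fake_llm` in place of a real model: the "model" replies with a structured tool call (here a JSON string), and the agent looks up and runs the matching Python function. `fake_llm`, `get_weather`, and `TOOLS` are hypothetical names for illustration; real LLM providers return tool calls in their own formats.

```python
import json
from typing import Callable, Dict

def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather service."""
    return f"cloudy, 18 degrees C in {city}"

# Registry mapping tool names (as the model sees them) to Python functions.
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}

def fake_llm(prompt: str) -> str:
    """Stand-in for an LLM that decides to call a tool.

    A real model would pick the tool and arguments from the prompt;
    this stub always requests the weather for Paris.
    """
    return json.dumps({"tool": "get_weather", "arguments": {"city": "Paris"}})

def run_agent(prompt: str) -> str:
    # Step 1: the model replies with a structured tool call.
    call = json.loads(fake_llm(prompt))
    # Step 2: the agent dispatches to the matching function with the given arguments.
    result = TOOLS[call["tool"]](**call["arguments"])
    # Step 3: a real agent would send this result back to the model for a final answer.
    return result

print(run_agent("What's the weather in Paris?"))
```

In a multi-step agent, these three steps repeat until the model answers without requesting another tool.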

Types of AI Agents

1. Simple Reflex Agents:

This is the most basic type of agent, and it is stateless: it reacts only to the current input using condition-action rules. For example, a thermostat turns on the heat when the temperature falls below 60°F. Because these agents store no information about past states, they are prone to mistakes whenever the current input alone is not enough to decide.

2. Model-Based Reflex Agents:

These agents maintain an internal model that tracks past states to inform their decisions. For example, the same thermostat could also consider the time of day and user preferences when adjusting the temperature.

3. Goal-Based Agents:

These agents work toward explicit goals and choose the actions that move them closer to achieving those goals. They are widely used in robotics, where task completion is the goal.

4. Utility-Based Agents:

These agents assign utility scores to multiple possible outcomes and choose the action with the best overall utility. For example, a stock trading bot might weigh risk, returns, ratings, and other factors before suggesting investments.

5. Learning Agents:

These agents improve over time by gaining more knowledge from new experiences and data.
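The simple reflex, model-based reflex, and utility-based agents described above can each be sketched in a few lines of Python. This is a minimal illustration under assumed values: the 60°F threshold comes from the text, while the day and night setpoints, the three-reading average, and the investment weights are hypothetical numbers chosen only to show how each agent type reaches a decision.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# 1. Simple reflex agent: a stateless condition-action rule.
def reflex_thermostat(temp_f: float) -> str:
    return "heat_on" if temp_f < 60 else "idle"

# 2. Model-based reflex agent: keeps internal state (past readings) and
#    uses extra context (time of day, user preferences) to decide.
@dataclass
class ModelBasedThermostat:
    day_setpoint_f: float = 68.0    # hypothetical user preference
    night_setpoint_f: float = 58.0  # hypothetical user preference
    history: List[float] = field(default_factory=list)

    def act(self, temp_f: float, hour: int) -> str:
        self.history.append(temp_f)
        setpoint = self.day_setpoint_f if 7 <= hour < 22 else self.night_setpoint_f
        # Average the last three readings to smooth out a noisy sensor.
        recent = self.history[-3:]
        average = sum(recent) / len(recent)
        return "heat_on" if average < setpoint else "idle"

# 4. Utility-based agent: score every option with weighted criteria and
#    pick the option with the highest overall utility.
def choose_investment(options: Dict[str, Dict[str, float]],
                      weights: Dict[str, float]) -> str:
    def utility(attrs: Dict[str, float]) -> float:
        return sum(weight * attrs[name] for name, weight in weights.items())
    return max(options, key=lambda option: utility(options[option]))

print(reflex_thermostat(62))          # idle: 62 F is not below 60 F
thermostat = ModelBasedThermostat()
print(thermostat.act(62, hour=12))    # heat_on: below the 68 F daytime setpoint
print(thermostat.act(62, hour=23))    # idle: above the 58 F night setpoint

options = {
    "bond_fund": {"expected_return": 0.04, "risk": 0.1, "rating": 0.9},
    "tech_stock": {"expected_return": 0.12, "risk": 0.6, "rating": 0.7},
}
# A negative weight penalizes risk.
weights = {"expected_return": 5.0, "risk": -1.0, "rating": 0.5}
print(choose_investment(options, weights))  # bond_fund
```

The contrast between the first two agents is the point: given the same 62°F reading, the reflex agent always stays idle, while the model-based agent's answer depends on context it keeps track of.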


Challenges of AI Agents

- Causality: Single-agent systems operate in isolation and often confuse correlation with causation. For example, an LLM agent may see patient visits rise as an illness spreads, but can’t tell which drives which.

- Scaling: A single AI agent can’t scale beyond its core skill set, so it struggles to achieve objectives outside that scope.

- LLM limits: LLMs still hallucinate and struggle to adapt to unfamiliar or shifting situations. This can lead to disastrous consequences in critical industries like medical research.

- Reliability & Safety Concerns: Without formal verification of an agent’s responses, relying on AI agents for critical infrastructure is risky.

These challenges highlight why a single agent is often insufficient – paving the way for a multi-agent workflow.

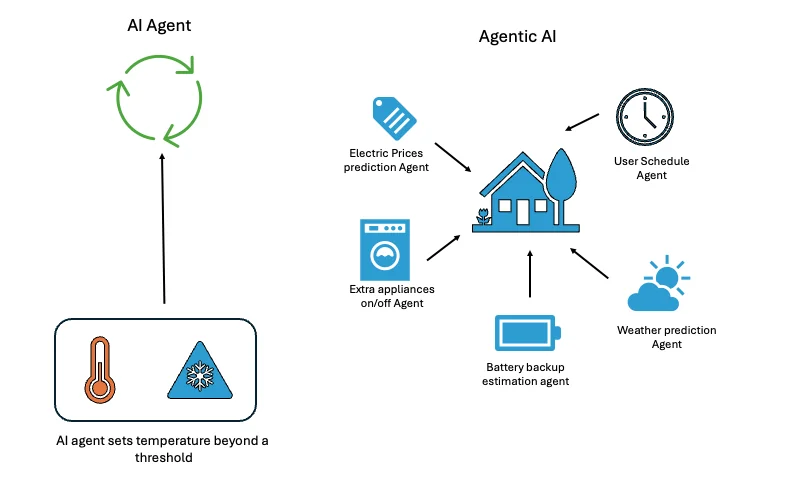

Agentic AI

AI has evolved remarkably over the last few years, from simple rule-based systems to sophisticated generative models capable of handling not only text but also images, audio, and video.

With support for images, audio, and video, AI’s capabilities now extend to critical industries like medical research, where AI can help identify disease from MRI, X-ray, and CT scan images. Integrating agent-based AI into organizational processes has been reported to increase productivity by up to 40%.

Generative AI has already started transforming how industries automate tasks that would otherwise take hours of work, which raises the question of where we go from here. With further research over the next few years, AI agents are likely to overcome some of the limitations described earlier.

However, organizational processes are often too complex for a single AI agent to handle end to end. For example, in a procurement firm, the path from purchase requisition to payment includes approval, purchase order creation, delivery of goods or services, and finally invoice creation and payment.

Automating such a process with standalone agents would mean deploying a separate agent at each stage, with an expert human intervening between them. This is where Agentic AI can bridge the gap.

Agentic AI distributes tasks among chained AI agents. Each agent completes its objective and passes the result to the next, much like a relay race.
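The relay-race idea can be sketched with the procurement example from earlier: each agent is a function that receives the shared state, does its part, and hands an updated state to the next agent. This is a minimal, framework-free sketch; the agent functions, the `run_chain` helper, and the 10,000 auto-approval limit are hypothetical, and in a real system each step would typically involve an LLM or an external system rather than a fixed rule.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Agent = Callable[[State], State]

def approval_agent(state: State) -> State:
    # Hypothetical policy: auto-approve requests up to a spending limit.
    return {**state, "approved": state["amount"] <= 10_000}

def purchase_order_agent(state: State) -> State:
    if not state["approved"]:
        return {**state, "status": "rejected"}
    return {**state, "po_number": f"PO-{state['request_id']}", "status": "ordered"}

def delivery_agent(state: State) -> State:
    # Only confirm delivery for orders that were actually placed.
    if state["status"] != "ordered":
        return state
    return {**state, "status": "delivered"}

def invoice_payment_agent(state: State) -> State:
    if state["status"] != "delivered":
        return state
    return {**state, "invoice_total": state["amount"], "status": "paid"}

def run_chain(agents: List[Agent], state: State) -> State:
    # Like a relay race: each agent receives the previous agent's output.
    for agent in agents:
        state = agent(state)
    return state

PIPELINE = [approval_agent, purchase_order_agent, delivery_agent, invoice_payment_agent]

result = run_chain(PIPELINE, {"request_id": 42, "amount": 2_500})
print(result["status"], result["po_number"])  # paid PO-42
```

Because each agent returns a new copy of the state rather than mutating it, the output of every hand-off can be logged or inspected, which helps when a human needs to review where a chain went wrong.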

A Comparison of Agentic AI Platforms

LangGraph

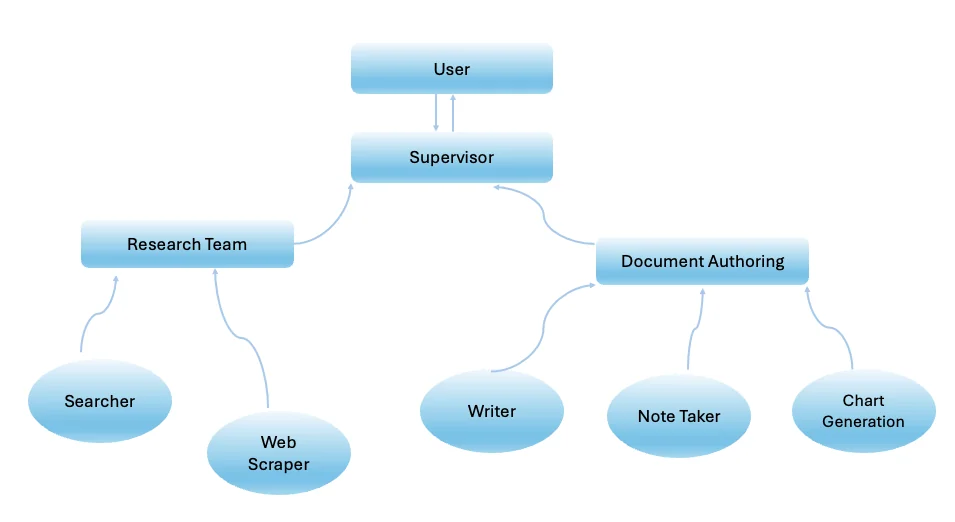

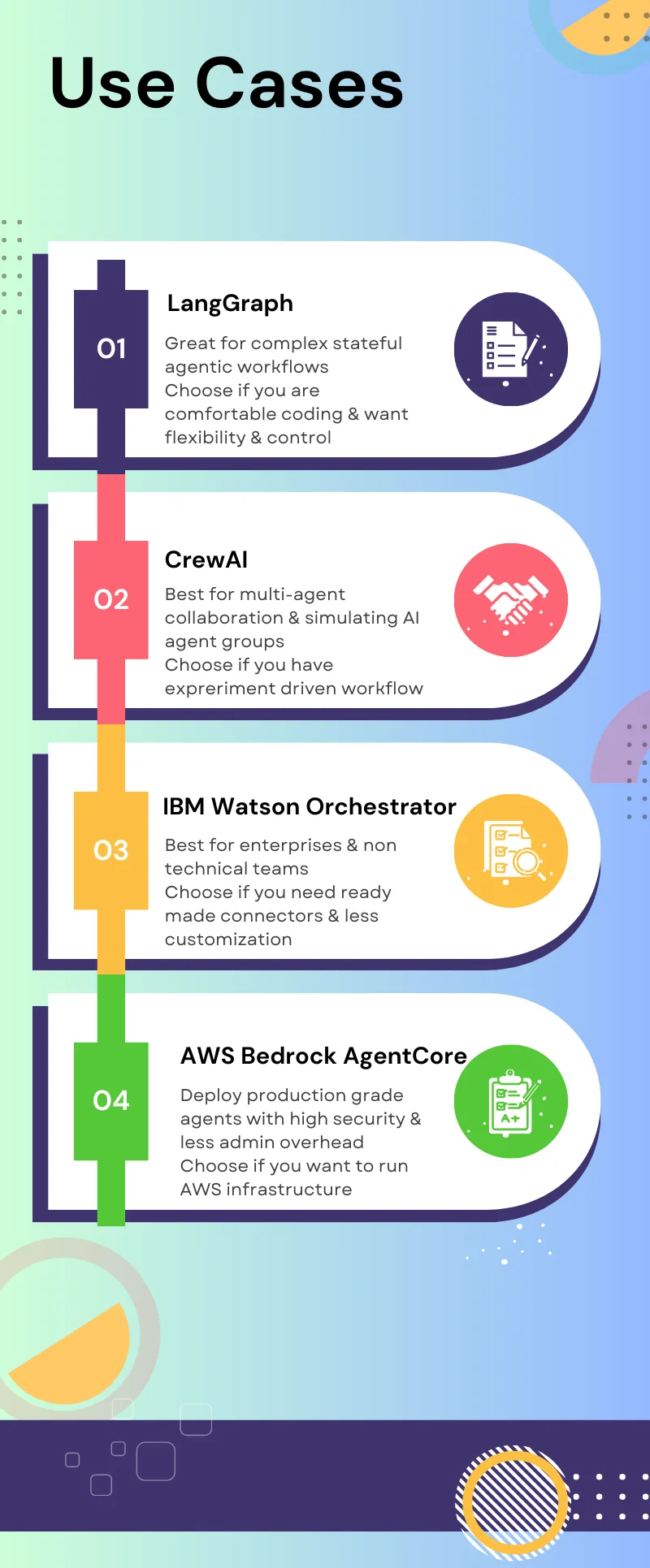

Created by LangChain, LangGraph is built around a graph model for orchestrating long-running, stateful agents. It targets developers and teams building custom, stateful agents who want a high degree of control over how those agents behave in code.

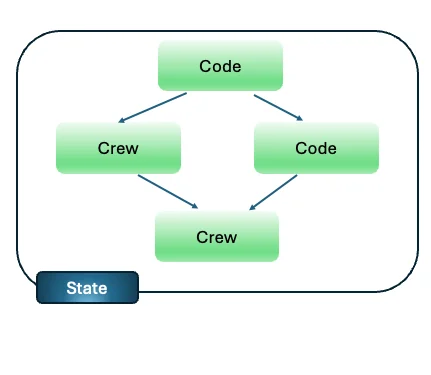

In LangGraph, each AI agent is a node, and nodes are connected by edges that determine how state and control pass from one agent to the next.

These graphs can be cyclic or hierarchical, with a supervisor node (agent) deciding at runtime which agent acts next.
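The node, edge, and supervisor pattern can be illustrated without any framework. The sketch below is a plain-Python approximation, not LangGraph's API: LangGraph expresses the same idea with its own constructs (for example `StateGraph` with `add_node` and `add_conditional_edges`). Here the hypothetical `supervisor` function plays the role of a conditional edge, sending control back to the researcher (a cycle) until enough notes exist, then to the writer, then to `END`.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]

def researcher(state: State) -> State:
    # Hypothetical worker agent: appends one note per visit.
    notes = state.get("notes", []) + [f"fact about {state['topic']}"]
    return {**state, "notes": notes}

def writer(state: State) -> State:
    # Hypothetical worker agent: turns the collected notes into a draft.
    return {**state, "draft": " ".join(state["notes"])}

def supervisor(state: State) -> str:
    """Routing function: name of the next node to run, or "END"."""
    if len(state.get("notes", [])) < 2:
        return "researcher"  # cycle back until there is enough material
    if "draft" not in state:
        return "writer"
    return "END"

NODES: Dict[str, Callable[[State], State]] = {"researcher": researcher, "writer": writer}

def run_graph(state: State, max_steps: int = 10) -> State:
    for _ in range(max_steps):
        next_node = supervisor(state)
        if next_node == "END":
            return state
        state = NODES[next_node](state)
    # Guard against cycles that never terminate.
    raise RuntimeError("graph did not reach END within max_steps")

final = run_graph({"topic": "agents"})
print(final["draft"])  # fact about agents fact about agents
```

The `max_steps` guard reflects a real design concern with cyclic graphs: without a step limit, a routing function that never returns `END` would loop forever.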

LangGraph has some key advantages over other frameworks:

- Graph-Based Workflows: Workflows are defined as graphs that support conditional logic as well as hierarchical and cyclic flows

- Stateful execution: Supports short- and long-term memory across sessions

- Human in the loop & real-time feedback: Allows for human intervention at various points in the workflow

- Error handling & debugging tools: A visual IDE (LangGraph Studio) that shows errors, states, and the transitions between nodes
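Stateful execution and human-in-the-loop intervention work together: the workflow saves its state, pauses before a sensitive step, and resumes once a person approves. The following is a minimal plain-Python sketch of that idea under stated assumptions. It does not use LangGraph's own checkpointing or interrupt features, and the step names, the `NEEDS_APPROVAL` set, and the JSON checkpoint format are all hypothetical.

```python
import json
from typing import Any, Callable, Dict, List, Optional, Tuple

State = Dict[str, Any]

def plan_step(state: State) -> State:
    return {**state, "plan": f"refund {state['amount']} USD to {state['customer']}"}

def execute_step(state: State) -> State:
    # Hypothetical side effect (e.g. issuing a refund) that a human should approve first.
    return {**state, "executed": True}

STEPS: List[Tuple[str, Callable[[State], State]]] = [("plan", plan_step), ("execute", execute_step)]
NEEDS_APPROVAL = {"execute"}

def run(state: Optional[State] = None, checkpoint: Optional[str] = None,
        approved: bool = False) -> Tuple[State, Optional[str]]:
    """Run STEPS in order, pausing before any step that needs human approval.

    Returns (state, checkpoint): checkpoint is a JSON string when paused and
    None when finished. approved=True approves every remaining gated step.
    """
    start = 0
    if checkpoint is not None:
        saved = json.loads(checkpoint)
        state, start = saved["state"], saved["next_step"]
    if state is None:
        raise ValueError("provide either an initial state or a checkpoint")
    for i in range(start, len(STEPS)):
        name, step = STEPS[i]
        if name in NEEDS_APPROVAL and not approved:
            # Persist progress so a human can review the plan and resume later.
            return state, json.dumps({"state": state, "next_step": i})
        state = step(state)
    return state, None

state, cp = run({"amount": 30, "customer": "ACME"})
print(state["plan"], "| paused:", cp is not None)  # refund 30 USD to ACME | paused: True
state, cp = run(checkpoint=cp, approved=True)
print(state["executed"], cp)                       # True None
```

Serializing the checkpoint to a string is what allows the pause to outlive the current process, for example while a reviewer takes hours to respond.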

Despite these strengths, LangGraph also has its limitations:

- Complexity: The multi-agent workflow can get complicated quickly as the number of nodes in the graph grows

- Performance: More nodes can mean higher latency, and optimizing the graph often requires ongoing manual tuning

- Limited Integrations: Compared to other solutions on the market, it offers fewer prebuilt API connectors, so integrations often have to be built manually

CrewAI

CrewAI is another multi-agent framework, written in Python, in which agents take on a variety of roles. It organizes agents into a team with specialized roles, and the agents work together to complete shared objectives.

CrewAI has two main offerings:

- CrewAI Crews: Optimized for collaboration; they work like teams in which each agent has a specific role to play

- CrewAI Flows: Follow an event-driven architecture for orchestrating tasks, where each step can run code, make an individual LLM call, or invoke a specific crew
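The difference between crews and flows can be sketched in plain Python: a crew-style team passes a task through role-specialized agents, while a flow-style orchestrator reacts to named events and can hand a step to a whole team. This is a hypothetical illustration, not CrewAI's API, which provides its own classes (`Agent`, `Task`, `Crew`, and a `Flow` class with `@start` and `@listen` decorators). `RoleAgent`, `Team`, `on`, and `run_flow` are invented names, and the lambdas stand in for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class RoleAgent:
    role: str
    work: Callable[[str], str]  # stand-in for an LLM call made in this role

@dataclass
class Team:
    """Crew-style collaboration: role agents work on the task in sequence."""
    members: List[RoleAgent]

    def run(self, task: str) -> str:
        result = task
        for member in self.members:
            result = member.work(result)  # each role builds on the previous output
        return result

research_team = Team([
    RoleAgent("researcher", lambda topic: f"notes on {topic}"),
    RoleAgent("writer", lambda notes: f"report from {notes}"),
])

# Flow-style orchestration: handlers react to named events and emit the next event.
Handler = Callable[[str], Tuple[str, str]]
HANDLERS: Dict[str, Handler] = {}

def on(event: str) -> Callable[[Handler], Handler]:
    def register(fn: Handler) -> Handler:
        HANDLERS[event] = fn
        return fn
    return register

@on("request_received")
def normalize(payload: str) -> Tuple[str, str]:
    # A plain-code step; a single LLM call could sit here instead.
    return "needs_research", payload.strip().lower()

@on("needs_research")
def delegate_to_team(payload: str) -> Tuple[str, str]:
    # This step hands the work to a whole team of agents.
    return "done", research_team.run(payload)

def run_flow(event: str, payload: str) -> str:
    # Keep dispatching events until a handler signals "done".
    while event != "done":
        event, payload = HANDLERS[event](payload)
    return payload

print(run_flow("request_received", "  Agentic AI "))  # report from notes on agentic ai
```

Mixing the two, as in `delegate_to_team`, mirrors the flexibility the text describes: deterministic steps stay in ordinary code, and open-ended work goes to a team.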

Like LangGraph, CrewAI has its own advantages:

- Flexible Workflow: Allows mixing flows and crews depending on the needs of the workflow

- Scalable: Flows and crews can be modified, and agents can be added or removed as needed

- Open Source & Self-Hosting: Can be run on your own infrastructure

- Error handling: Gives a UI for errors, debugging agent behavior, and workflows

The following are its limitations:

- High technical know-how: The requirement of Python coding skills means that non-technical users would need developer support

- Lack of Scalability: Scaling workflows to enterprise-grade might be a challenge with latency issues and coordination requirements

- Security & Compliance: As an open-source framework, CrewAI does not natively provide enterprise-grade security

- New Ecosystem: Since CrewAI is relatively new, the documentation and resources are somewhat limited

IBM Watson

IBM offers a host of AI tools under its Watson and watsonx brands. Among them, watsonx Orchestrate provides multi-agent orchestration that chains multiple agents together.

It works as an AI-powered digital assistant that automates routine tasks using natural language processing.

Compared to the other platforms, it is more of a packaged product built on prewritten components, with use cases across customer service, HR, procurement, and sales.

watsonx Orchestrate provides the following benefits:

- AI agent builder: A tool that allows you to create generative AI agents without writing code and with minimal configuration

- Agent Catalog: A library of IBM watsonx agents that can be searched by domain, use case, or application

- Pre-built AI agents: Lets you skip building agents from scratch by choosing from prebuilt ones

watsonx Orchestrate also has the following limitations:

- IBM Ecosystem: Agentic workflows are tied to IBM services, so migrating to other platforms can be a challenge

- Cost Structure: IBM solutions are generally expensive, with licensing and long-term commitments

- Low customizability: Since the agents are packaged by IBM, developer teams have limited customization options

- Less dynamic: watsonx Orchestrate focuses more on task completion and less on collaboration between agents

Amazon Bedrock AgentCore

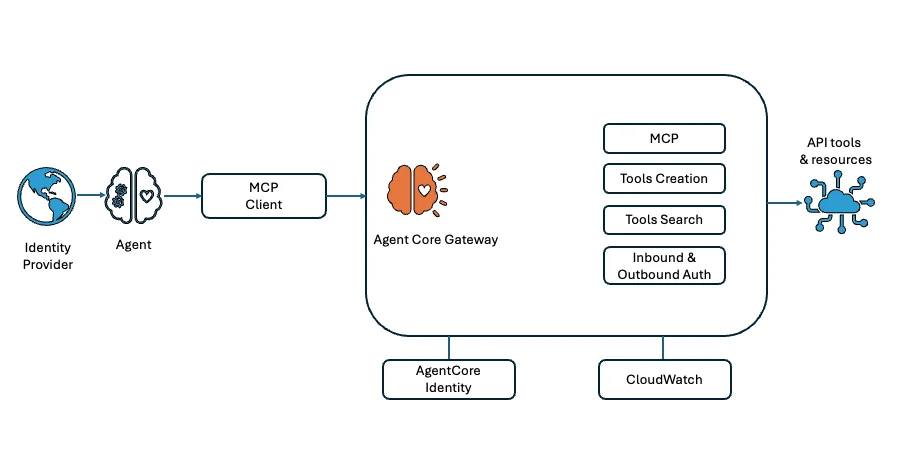

Amazon Bedrock AgentCore is a fully managed AWS service that enables users to deploy and manage AI agents at scale. It can be used standalone or with other frameworks like CrewAI, LangGraph, or Strands Agents (an open-source SDK released by AWS).

AgentCore is modular: you can use its components independently or together, depending on your needs.

The key components of AgentCore are:

- AgentCore Runtime: Hosts agents and tools in a secure, serverless environment and handles inputs, context, and invocations

- AgentCore Identity: Manages authentication and authorization for agents and users, integrating with OAuth 2.0, identity providers such as Okta, AWS IAM, and API keys

- AgentCore Memory: Provides stateful interactions, from in-session context to longer-lived user preferences, facts, and checkpoints

- AgentCore Gateway: Turns internal and external services into agent-compatible tools

- Code Interpreter & Browser Tool: The Code Interpreter runs code in a sandboxed environment, and the Browser Tool provides a serverless browser runtime for interacting with web content in isolation
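The split between in-session context and longer-lived user facts that a memory service like AgentCore Memory manages can be sketched as a two-tier store. This is a hypothetical, in-process illustration of the concept only; it is not the AgentCore Memory API, and `TwoTierMemory`, its methods, and the `max_turns` limit are invented for this example.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque, Dict, Tuple

class TwoTierMemory:
    """Hypothetical agent memory with two tiers.

    Short-term: a bounded buffer of recent turns per session.
    Long-term: per-user facts and preferences that persist across sessions.
    """

    def __init__(self, max_turns: int = 4) -> None:
        self.sessions: DefaultDict[str, Deque[Tuple[str, str]]] = defaultdict(
            lambda: deque(maxlen=max_turns))
        self.user_facts: DefaultDict[str, Dict[str, str]] = defaultdict(dict)

    def add_turn(self, session_id: str, role: str, text: str) -> None:
        # Oldest turns are dropped automatically once maxlen is reached.
        self.sessions[session_id].append((role, text))

    def remember(self, user_id: str, key: str, value: str) -> None:
        self.user_facts[user_id][key] = value

    def build_context(self, user_id: str, session_id: str) -> str:
        facts = "; ".join(f"{k}={v}" for k, v in sorted(self.user_facts[user_id].items()))
        turns = "\n".join(f"{role}: {text}" for role, text in self.sessions[session_id])
        return f"[user facts] {facts}\n{turns}"

memory = TwoTierMemory(max_turns=2)
memory.remember("user-1", "preferred_region", "us-east-1")
for i in range(3):
    memory.add_turn("session-a", "user", f"message {i}")
print(memory.build_context("user-1", "session-a"))  # facts plus the last two turns
print(memory.build_context("user-1", "session-b"))  # new session: facts only, no turns
```

A managed service adds what this sketch lacks, such as durable storage, access control, and extraction of facts from conversations, but the retrieval question is the same: what from the current session and what from the user's history goes into the next prompt.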

The key benefits of AgentCore are:

- Enterprise Grade Security: Agents run within AWS infrastructure with built-in authentication and authorization

- Model & Framework Flexibility: AgentCore works with different agent frameworks (LangGraph, CrewAI, or Strands Agents), which makes it highly customizable

- Stateful Architecture: Keeps short-term and long-term memory context

- Scalable: Since this is a serverless architecture, it’s easy to scale out or in depending on requirements

The limitations of Amazon Bedrock AgentCore are:

- AWS Ecosystem: While tight AWS integration helps with enterprise-level security, it makes agents harder to move to infrastructure outside AWS

- Pay as you go: Because AgentCore is billed on usage, costs can climb as adoption grows across the organization

- Less Control: Since AgentCore is fully managed by AWS, you have no visibility into the underlying infrastructure and limited room for customization

- Relatively New: AgentCore is one of AWS’s newest offerings and is likely to see significant improvements and changes in upcoming releases

The following graphic summarizes the use cases for each of the four Agentic AI platforms covered in this comparison.

Conclusion

Although GenAI has been a game-changer for intelligent automation, its limitations have opened many other doors. One of these is multi-agent workflows, which break large processes down into coordinated agents that can reason, react, and adapt.

Agentic AI workflows can transform how work is done across industries. In the coming years, we’ll likely see a convergence of open-source flexibility with enterprise-grade systems.

As organizations begin to adopt Agentic AI workflows, the future of AI is not about generating responses – it’s about coordinated AI agents that work in tandem to deliver outcomes at scale.

Frequently Asked Questions

Q1. What is GenAI?

A. GenAI is a type of artificial intelligence that takes user input in the form of text, images, video, or audio and interactively generates new content.

Q2. What is an AI agent?

A. An AI agent is a program, often written in a language like Python, that perceives its environment and makes decisions based on it with little or no human input.

Q3. What is agent chaining?

A. Agent chaining is when one agent performs a task and passes the result to another agent, which uses that input to perform its own task and passes its output to the next agent.
